It can be done, but I'd start with the "read-entire-file-to-memory" approach. It's also possible to do a streaming approach where you read a little bit of the file from S3, pump it into ffmpeg, and then stream the upload piece by piece, but it's a bit harder because you either have to use non-blocking IO or multiple threads/greenlets.

If the video has a size of 420x360 pixels, then the first 420x360x3 bytes output by FFMPEG will give the RGB values of the pixels of the first frame, line by line, top to bottom. The next 420x360x3 bytes after that will represent the second frame, etc.
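The threaded variant of the streaming approach could look roughly like the sketch below. It is only an illustration: `stream_through` is a hypothetical helper, `chunks` stands for whatever iterable of byte strings your download yields, and the consumer of the generator would be your upload code. A helper thread writes to the process's stdin while the caller reads its stdout, so neither pipe fills up and blocks the other.

```python
import subprocess
import threading


def stream_through(command, chunks, read_size=64 * 1024):
    """Feed `chunks` to `command` on a helper thread and yield its stdout.

    Hypothetical helper: `command` is any program that reads stdin and
    writes stdout, for example an ffmpeg call using `pipe:0` and `pipe:1`.
    """
    proc = subprocess.Popen(command, stdin=subprocess.PIPE, stdout=subprocess.PIPE)

    def feed():
        # Runs in the helper thread: push input, then signal end of input.
        try:
            for chunk in chunks:
                proc.stdin.write(chunk)
        except OSError:
            pass  # the process went away before consuming everything
        finally:
            try:
                proc.stdin.close()
            except OSError:
                pass

    writer = threading.Thread(target=feed, daemon=True)
    writer.start()
    try:
        while True:
            out = proc.stdout.read(read_size)
            if not out:  # empty read means the process closed its stdout
                break
            yield out
    finally:
        proc.stdout.close()
        writer.join()
        proc.wait()
```

Each piece yielded by the generator can be handed to the uploader as soon as it arrives, so the whole file never has to sit in memory.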

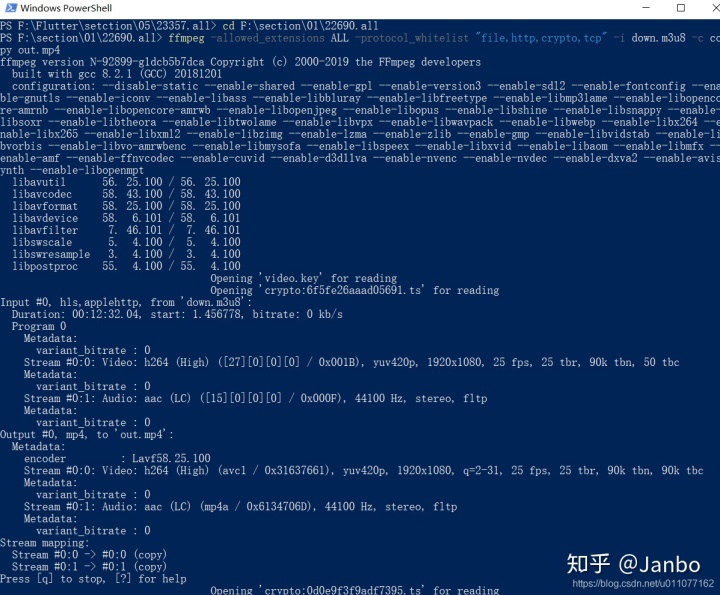

Later I will make something a little more complex out of this ffmpeg command. You can make this a little more useful (or you can just run the code in the cmd).

To open the video, start FFMPEG as a subprocess: `import subprocess as sp`, build the `command` list, and create `pipe` with `sp.Popen`. In that command, `-i myHolidays.mp4` indicates the input file, while `rawvideo`/`rgb24` asks for a raw RGB output. The format `image2pipe` and the `-` at the end tell FFMPEG that it is being used with a pipe by another program. In `sp.Popen`, the `bufsize` parameter must be bigger than the size of one frame (width x height x 3 bytes for RGB). It can be omitted most of the time in Python 2 but not in Python 3, where its default value is pretty small. Now we just have to read the output of FFMPEG.

With `ffmpegstreaming` (`import ffmpegstreaming`), there are several ways to open a resource. For an FFmpeg-supported resource, you can pass the local path of a video (or any supported resource) to the `input` method.
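As a rough sketch of the subprocess approach described above: the argument list below is assembled only from the options named in the text, and `FFMPEG_BIN`, `build_command`, `open_video`, and `read_frame` are illustrative names, not part of any library. It assumes an `ffmpeg` executable on the PATH and that you already know the video's width and height.

```python
import subprocess as sp

FFMPEG_BIN = "ffmpeg"  # assumption: ffmpeg is installed and on the PATH


def build_command(path):
    """Ask FFMPEG for raw RGB frames on stdout (the trailing '-')."""
    return [FFMPEG_BIN, "-i", path,
            "-f", "image2pipe",
            "-pix_fmt", "rgb24",
            "-vcodec", "rawvideo", "-"]


def open_video(path, width, height):
    """Start FFMPEG with a buffer larger than one frame."""
    frame_size = width * height * 3
    return sp.Popen(build_command(path), stdout=sp.PIPE, bufsize=2 * frame_size)


def read_frame(stream, width, height):
    """Return one frame as rows of (r, g, b) tuples, or None at end of stream."""
    frame_size = width * height * 3
    raw = stream.read(frame_size)
    if len(raw) < frame_size:
        return None  # no complete frame left
    row_bytes = width * 3
    return [
        [tuple(raw[y * row_bytes + 3 * x: y * row_bytes + 3 * x + 3])
         for x in range(width)]
        for y in range(height)
    ]


# Usage (needs a real file and ffmpeg, so it is left as a comment):
# pipe = open_video("myHolidays.mp4", 420, 360)
# first = read_frame(pipe.stdout, 420, 360)
# second = read_frame(pipe.stdout, 420, 360)
```

Nested lists keep the example dependency-free; for real work you would more likely wrap the raw bytes in an array type instead of building tuples pixel by pixel.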
